Pizza-making robots are already here, and taking a pizza order is trivial for any half-decent chatbot. But if you’re waiting for a robot to deliver your ‘za, you may be waiting for a while.

It’s not because autonomous navigation technology doesn’t exist — it’s that the data set required to run it is too specific. Digital maps can lead a robot to your driveway, but there are no detailed directions from the curb to your door.

Currently, robots rely on humans to manually map the environments in which they work. But mapping every driveway in the United States would be a tall order. That may not be necessary anymore, however, thanks to a team of engineers at MIT.

Led by fifth-year graduate student Michael Everett, the team created a new autonomous navigation method that doesn’t require mapping out a neighborhood ahead of time. Instead, their technology allows robots to find front doors like we do, using features such as driveways, hedges and walkways as contextual clues to locate their destinations.

In November, Everett presented the white paper, co-authored with his advisor Jonathan How and fellow researcher Justin Miller (who now works for Ford Motor Company), at international conferences in Asia. The team’s findings are about more than food delivery, however: they are an attempt at solving the “last-mile” riddle that has long plagued AI developers.

Breaking the problem into manageable pieces

Everett first came across robotics’ last-mile problem last year while studying how drones carried out simulated package deliveries. Before running each experiment, he had to spend 10 minutes manually mapping the area out, and then provide the drone with the exact coordinates to complete a package delivery.

While it was a minor inconvenience for him, he realized it would be nearly impossible for a drone to navigate a suburban neighborhood on its own, let alone an entire city. That challenge has served as one of the biggest obstacles preventing Amazon and other e-commerce companies from using robots to deliver packages.

“If you’re mapping one place, it’s worth the investment,” Everett said. “But as soon as people start asking if we can do it at the scale of a city, that’s millions of dollars invested in time to manually make that map.”

There’s also no way to make sure a map is always up to date, as new condos, streets and shops pop up to change the city landscape.

Everett partnered with Miller and his advisor, How, to find a new approach. Rather than create an end-to-end algorithm that would require them to write everything from scratch, the team decided to break the problem up into a modular architecture. This would allow them to home in on the missing pieces that would prevent a robot from finding the front door.

Researchers had already found ways to teach robots semantic concepts: for instance, recognizing that an image of a door shows a “door,” and that a driveway is a “driveway.” This meant a robot could be trained to understand a command like “Go find the door.” The team used that method to create a semantic simultaneous localization and mapping (SLAM) algorithm, which turns the information collected by the robot’s sensors into a map.
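To make the mapping idea concrete, here is a minimal Python sketch of only the “mapping” half of a semantic map: labeled detections are dropped into grid cells, and each cell reports the label it has seen most often. It assumes the robot already knows its own position (real SLAM estimates that as well), and the class and method names are hypothetical, not taken from the team’s code.

```python
from collections import Counter, defaultdict


class SemanticGridMap:
    """Toy semantic map: every grid cell keeps a vote count per label.

    Hypothetical illustration. It assumes the robot's pose is already known, so
    detections arrive in world coordinates; real semantic SLAM estimates the pose too.
    """

    def __init__(self, cell_size: float = 0.5):
        self.cell_size = cell_size  # edge length of one grid cell, in meters
        self.votes: dict[tuple[int, int], Counter] = defaultdict(Counter)

    def _cell(self, x: float, y: float) -> tuple[int, int]:
        # Discretize a world coordinate into a grid index.
        return (int(x // self.cell_size), int(y // self.cell_size))

    def add_observation(self, x: float, y: float, label: str) -> None:
        # One labeled point from the perception module, e.g. (2.2, 0.4, "driveway").
        self.votes[self._cell(x, y)][label] += 1

    def label_at(self, x: float, y: float) -> str:
        # Majority vote over everything seen in this cell; "unknown" if never observed.
        counts = self.votes.get(self._cell(x, y))
        if not counts:
            return "unknown"
        return counts.most_common(1)[0][0]


m = SemanticGridMap(cell_size=1.0)
m.add_observation(2.2, 0.4, "driveway")
m.add_observation(2.7, 0.9, "driveway")
m.add_observation(2.5, 0.5, "grass")  # a noisy detection in the same cell
print(m.label_at(2.0, 0.0))  # driveway
print(m.label_at(9.0, 9.0))  # unknown
```

Voting across repeated sightings lets an occasional misclassification, like the stray “grass” above, be outvoted.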


That map would then feed into another algorithm that could calculate a trajectory to the destination, which would then inform the controller system that tells the robot where to move.
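The modular hand-off described here (map, then trajectory, then controller) can be sketched as three swappable functions. Everything below, including the `ModularNavigator` class and the deliberately naive toy modules, is a hypothetical illustration of the architecture, not the researchers’ software.

```python
from dataclasses import dataclass
from typing import Callable

Grid = list[list[str]]   # semantic map: one label per cell
Cell = tuple[int, int]   # (row, col)


@dataclass
class ModularNavigator:
    """Hypothetical modular stack: mapping -> planning -> control."""
    build_map: Callable[[list[tuple[Cell, str]]], Grid]
    plan: Callable[[Grid, Cell], list[Cell]]
    control: Callable[[Cell, Cell], str]

    def step(self, observations, position: Cell) -> str:
        semantic_map = self.build_map(observations)
        path = self.plan(semantic_map, position)
        if len(path) < 2:
            return "stop"  # already at the goal, or no route found
        return self.control(path[0], path[1])


def toy_map(observations):
    # Place each labeled observation into a fixed 3x3 grid; unseen cells stay "unknown".
    grid = [["unknown"] * 3 for _ in range(3)]
    for (r, c), label in observations:
        grid[r][c] = label
    return grid


def toy_plan(grid, start):
    # Walk along the start row toward the first "door" cell in that row (deliberately naive).
    r, c = start
    if "door" not in grid[r]:
        return [start]
    goal_c = grid[r].index("door")
    step = 1 if goal_c >= c else -1
    return [(r, cc) for cc in range(c, goal_c + step, step)]


def toy_control(here, nxt):
    # Translate the next waypoint into a motion command.
    dr, dc = nxt[0] - here[0], nxt[1] - here[1]
    return {(0, 1): "east", (0, -1): "west", (1, 0): "south", (-1, 0): "north"}[(dr, dc)]


nav = ModularNavigator(toy_map, toy_plan, toy_control)
obs = [((1, 0), "driveway"), ((1, 1), "walkway"), ((1, 2), "door")]
print(nav.step(obs, (1, 0)))  # east
```

Because each stage is just a function, a better planner can be dropped in without touching the mapping or control code, which is the practical payoff of a modular design.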

Still, those algorithms didn’t allow the robot to make decisions in real time like we do. What was missing was an algorithm that could place the images a robot processed into context, which in turn could inform the planning system.

That’s what Everett and Miller set out to create. They just needed the data to train it.

“It became, ‘How can we compile a data set that actually contained information structure for the environment the robot is in? Does that data exist, and can we use it?’” Everett said.

Teaching a robot what a driveway looks like

Turns out, the researchers didn’t need to travel to subdivisions around the country to collect data on front yards.

Instead, they turned to the satellite images on Bing Maps, which gave them a trove of localized neighborhood data without requiring them to leave their workstations. (Google doesn’t allow its map data to be used in research.)

The researchers captured images of 77 front yards in Massachusetts, Ohio and Michigan. Since they knew they’d be testing the software in a simulated suburban neighborhood, they focused on residential streets in three suburban neighborhoods, and one urban one.

They also had to decide which features are universal to front yards. Not every lawn is green, and not every driveway is white concrete, but their shapes are similar.


So, using the open-source annotation tool LabelMe, the researchers outlined the polygonal shapes of objects like driveways, mailboxes, hedges and cars and labeled them. They also applied masks to the shapes to recreate the partial views a robot experiences lower to the ground. Annotating each house took about 15 minutes, Everett said, but the information was valuable.
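For readers curious what polygon annotation turns into, the sketch below rasterizes labeled polygons into a per-pixel label grid and then hides everything beyond a fixed radius from the robot. It assumes each annotation is simply a label plus a list of (x, y) vertices, and the circular mask is only a crude, hypothetical stand-in for the partial views the researchers simulated; it does not read LabelMe’s files.

```python
Point = tuple[float, float]


def point_in_polygon(x: float, y: float, poly: list[Point]) -> bool:
    """Even-odd ray casting: count edge crossings of a ray going right from (x, y)."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(shapes, width, height, background="unknown"):
    """Turn (label, polygon) annotations into a per-pixel label grid.
    Later shapes overwrite earlier ones where they overlap."""
    grid = [[background] * width for _ in range(height)]
    for label, poly in shapes:
        for row in range(height):
            for col in range(width):
                if point_in_polygon(col + 0.5, row + 0.5, poly):  # test the pixel center
                    grid[row][col] = label
    return grid


def mask_partial_view(grid, robot, radius, hidden="unknown"):
    """Hide every cell farther than `radius` from the robot at (row, col),
    a crude, hypothetical stand-in for a low camera's limited view."""
    r0, c0 = robot
    return [
        [label if (r - r0) ** 2 + (c - c0) ** 2 <= radius ** 2 else hidden
         for c, label in enumerate(row)]
        for r, row in enumerate(grid)
    ]


shapes = [
    ("grass", [(0, 0), (6, 0), (6, 4), (0, 4)]),
    ("driveway", [(4, 0), (6, 0), (6, 4), (4, 4)]),  # drawn after grass, so it wins
]
full = rasterize(shapes, width=6, height=4)
seen = mask_partial_view(full, robot=(3, 5), radius=2)
for row in seen:
    print(row)
```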

But simply identifying images doesn’t tell the robot where to go in real time. The researchers also needed to interpret what those objects meant in relation to the robot’s goal.

Building the missing link

Using a technique called image-to-image translation, the researchers layered a second polygon on top of each object and assigned it a color, like yellow for a sidewalk. Having a second set of data allowed the robot to turn the images it sees into a map, Everett said.

This allows the robot to identify an object, such as a driveway, and place it in the proper context as a route it can travel to reach the door, all in real time and without needing to see the house first. Other objects, like cars or grass, are coded as obstacles.
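The color-coding step can be illustrated with a lookup table that maps each label to a display color and a traversable flag. This does not perform image-to-image translation itself, which relies on a trained model; it only shows the kind of encoding involved. Only “yellow for a sidewalk” and the split between routes (driveways) and obstacles (cars, grass) come from the article; the other colors and labels are placeholders.

```python
# Hypothetical coding table: label -> (RGB color, traversable?).
# Only "yellow for a sidewalk" and the route/obstacle split come from the article.
CLASS_CODING = {
    "sidewalk": ((255, 255, 0), True),   # yellow, traversable
    "driveway": ((0, 0, 255), True),     # a route the robot can travel
    "walkway":  ((0, 255, 255), True),
    "door":     ((0, 255, 0), True),     # the goal must be reachable
    "grass":    ((255, 0, 0), False),    # coded as an obstacle
    "car":      ((128, 0, 0), False),    # coded as an obstacle
}
UNKNOWN = ((0, 0, 0), False)  # unlisted labels are treated as non-traversable, to be safe


def encode(grid):
    """Map a per-cell label grid to (color image, traversable mask)."""
    colors = [[CLASS_CODING.get(lbl, UNKNOWN)[0] for lbl in row] for row in grid]
    free = [[CLASS_CODING.get(lbl, UNKNOWN)[1] for lbl in row] for row in grid]
    return colors, free


grid = [["grass", "driveway", "car"],
        ["grass", "walkway", "door"]]
colors, free = encode(grid)
print(free)  # [[False, True, False], [False, True, True]]
```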

“There aren’t that many obstacles that will block a robot in a front yard, but there are enough objects that are a good enough clue to indicate what direction to head,” Everett said. “If you see a driveway versus a road, you will know to turn toward the driveway.”

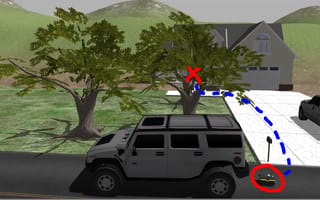

From there, they created an algorithm they called cost-to-go, which allows a robot to calculate the best route to the front door in real time. This enables any robot equipped with a camera to take a semantically labeled map of a person’s house and turn it into a heat map.
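One simple way to picture a cost-to-go map is a breadth-first search outward from the door over drivable cells, which gives every cell its step count to the door; repeatedly stepping to a lower value then traces a route. This is a textbook sketch under a strong assumption: the whole yard and the door’s location are known. The researchers’ system instead estimates these values from what the robot has seen, without being told where the door is, and the function names here are hypothetical.

```python
from collections import deque

INF = float("inf")
NEIGHBORS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def cost_to_go(free, goals):
    """Breadth-first search outward from the goal cell(s).

    free:  2-D list of bools, True where the robot may drive.
    goals: list of (row, col) cells, e.g. the front door.
    Returns a grid of step counts to the nearest goal (INF if unreachable).
    """
    rows, cols = len(free), len(free[0])
    cost = [[INF] * cols for _ in range(rows)]
    queue = deque()
    for r, c in goals:
        cost[r][c] = 0
        queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and free[nr][nc] and cost[nr][nc] == INF:
                cost[nr][nc] = cost[r][c] + 1
                queue.append((nr, nc))
    return cost


def follow(cost, start):
    """Greedy descent: step to the neighbor with the lowest cost-to-go until reaching 0."""
    path = [start]
    r, c = start
    if cost[r][c] == INF:
        return path  # no known route from here
    while cost[r][c] > 0:
        r, c = min(
            ((r + dr, c + dc) for dr, dc in NEIGHBORS
             if 0 <= r + dr < len(cost) and 0 <= c + dc < len(cost[0])),
            key=lambda rc: cost[rc[0]][rc[1]],
        )
        path.append((r, c))
    return path


free = [
    [False, True,  True,  True],   # lawn, walkway, walkway, door
    [False, True,  False, False],  # lawn, driveway, car, lawn
    [True,  True,  True,  True],   # sidewalk
]
cost = cost_to_go(free, goals=[(0, 3)])
path = follow(cost, start=(2, 3))
print(path)
```

Starting on the sidewalk in the bottom-right corner, the route heads left, turns up the driveway and follows the walkway to the door.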


The algorithm first sorts the color-coded map into traversable and non-traversable areas. Things it can’t drive on, such as the lawn or a car, are colored bright red, while everything else is converted to grayscale. The robot then makes another calculation, using the context of its image data to estimate the likeliest path to the front door, with possible paths shaded from dark gray to light gray, where lighter gray marks a better route.
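The coloring scheme described here can be sketched as a small rendering function: non-traversable cells become bright red, and drivable cells become gray, lighter where the cost to reach the door is lower. The particular gray levels are arbitrary choices for illustration, and the input cost values are a hand-made example rather than output from the researchers’ system.

```python
INF = float("inf")
RED = (255, 0, 0)
LIGHT, DARK = 230, 50  # gray levels for the best and worst reachable cells (illustrative)


def heat_map(cost, free):
    """Render a cost-to-go grid as RGB pixels.

    Non-traversable cells are bright red; traversable cells are gray, lighter where
    the estimated cost to reach the door is lower. Unreachable drivable cells get
    the darkest gray.
    """
    finite = [v for row, frow in zip(cost, free)
              for v, ok in zip(row, frow) if ok and v != INF]
    worst = max(finite, default=0)
    image = []
    for row, frow in zip(cost, free):
        out = []
        for v, ok in zip(row, frow):
            if not ok:
                out.append(RED)
            elif v == INF:
                out.append((DARK,) * 3)
            else:
                # 0.0 at the door, 1.0 at the farthest reachable cell.
                frac = v / worst if worst else 0.0
                g = round(LIGHT - frac * (LIGHT - DARK))
                out.append((g, g, g))
        image.append(out)
    return image


free = [[True, True, True],
        [False, False, True]]
cost = [[2, 1, 0],          # door at the top-right cell
        [INF, INF, 1]]
img = heat_map(cost, free)
for row in img:
    print(row)
```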

The researchers gambled that the semantic map and the heat map could inform each other and allow the system to predict its destination without first exploring or being given coordinates. Of course, they had no idea if their solution would even work.

“There’s a lot of uncertainty about how all this will fit together,” Everett said. “So you have to go out on a limb and try stuff.”

Simulating door-to-door service

With the algorithms in place, Everett tested them in a simulated suburban yard.

The home and yard simulations were randomly generated so that the system could be tested objectively. The researchers then ran their robot system against one without a cost-to-go estimate, and in the end, their robot found the front door 189 percent faster than the other robot.

The results showed that robots can be trained on static data and use context to find their goals, even in locations they have never seen before.


After presenting the white paper in Asia, Everett said he is working closely with Miller at Ford Motor Labs to take steps toward scaling the algorithm. They still need to address various infrastructure challenges like steps, as well as train the model on more data and build robots with the ability to complete autonomous delivery.

Still, the opportunities are endless, Everett said. The algorithm could allow robots to be trained to navigate other environments, like hotel hallways or restaurant interiors. And one day soon, a robot may finally be able to deliver that pizza to your door before it gets cold.

“For me, it’s just extremely cool to see a robot make decisions on its own and plan intelligently how to do things you want it to do without having to tell it how to do it,” Everett said. “It’s just one of the coolest things ever.”
